An ever-growing number of Internet of Things (IoT) devices generate vast amounts of data that can be represented as

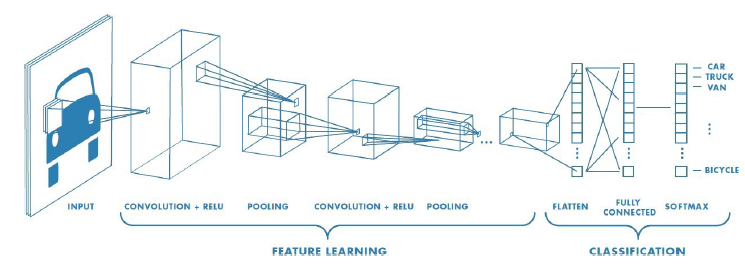

spectrograms. Spectrograms display the intensity of frequencies of a signal over time in a single image. The focus of this research is to investigate the suitability of performing deep learning on spectrogram images to tackle some of the problems that current other methods face in audio classification and earthquake prediction. To achieve this goal of solving the current issues of other methods, the type of network used is the Convolutional Neural Network (CNN) that a significant number of state-of-the-art image analysis solutions use. Transfer learning is performed on three pre-trained CNNs, and another CNN is designed and trained from scratch. Experiments are conducted to check the influence of spectrogram parameters, to verify whether data augmentation is viable for spectrograms and to see if combining different inputs is beneficial for the final evaluation score. Results show that the choice of parameters for spectrogram creation can have an impact on the classification score, with the best achieved F1-score being 0.77 on several different combinations of parameters. Data augmentation is found to have a significant impact on the classification score. When using transfer learning and data augmentation, the best model achieves an F1-score of 0.83. Combining different inputs has not been found to achieve a better score in the experiments of this study. This lack of improvement has to be evaluated in further research as the results do not get to the performance of methods used by other researchers. In conclusion, spectrograms can serve as an input to CNNs that researchers created for other purposes, especially in audio classification tasks.
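To make the notion of "spectrogram parameters" concrete, the following is a minimal sketch of how a spectrogram can be computed from a one-dimensional signal with a short-time Fourier transform. It is not the pipeline used in this research: the function name `spectrogram`, the parameters `window_size` and `hop`, the Hann window, and the synthetic 1 kHz test tone are all assumptions chosen for illustration.

```python
import numpy as np

def spectrogram(signal, sample_rate, window_size=256, hop=128):
    """Illustrative STFT spectrogram; returns (freqs, times, power_db).

    window_size and hop are hypothetical parameter names, standing in for
    the kind of spectrogram parameters whose influence is studied.
    """
    window = np.hanning(window_size)
    # Number of full windows that fit into the signal.
    n_frames = 1 + (len(signal) - window_size) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack([
        signal[i * hop : i * hop + window_size] * window
        for i in range(n_frames)
    ])
    # Power spectrum of each frame, converted to decibels.
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    power_db = 10 * np.log10(power + 1e-10)
    freqs = np.fft.rfftfreq(window_size, d=1 / sample_rate)
    times = (np.arange(n_frames) * hop + window_size / 2) / sample_rate
    # Transpose so that rows are frequency bins and columns are time steps,
    # matching the usual image layout of a spectrogram.
    return freqs, times, power_db.T

# Synthetic example: one second of a 1 kHz tone sampled at 8 kHz.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)
freqs, times, spec = spectrogram(tone, sample_rate)
```

The resulting two-dimensional array `spec` can be rendered or stored as an image and passed to an image classifier. Changing `window_size` or `hop` trades frequency resolution against time resolution, which is one way the choice of parameters can influence what a CNN sees.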

Click here for the Paper and the Poster

Guido van Straaten