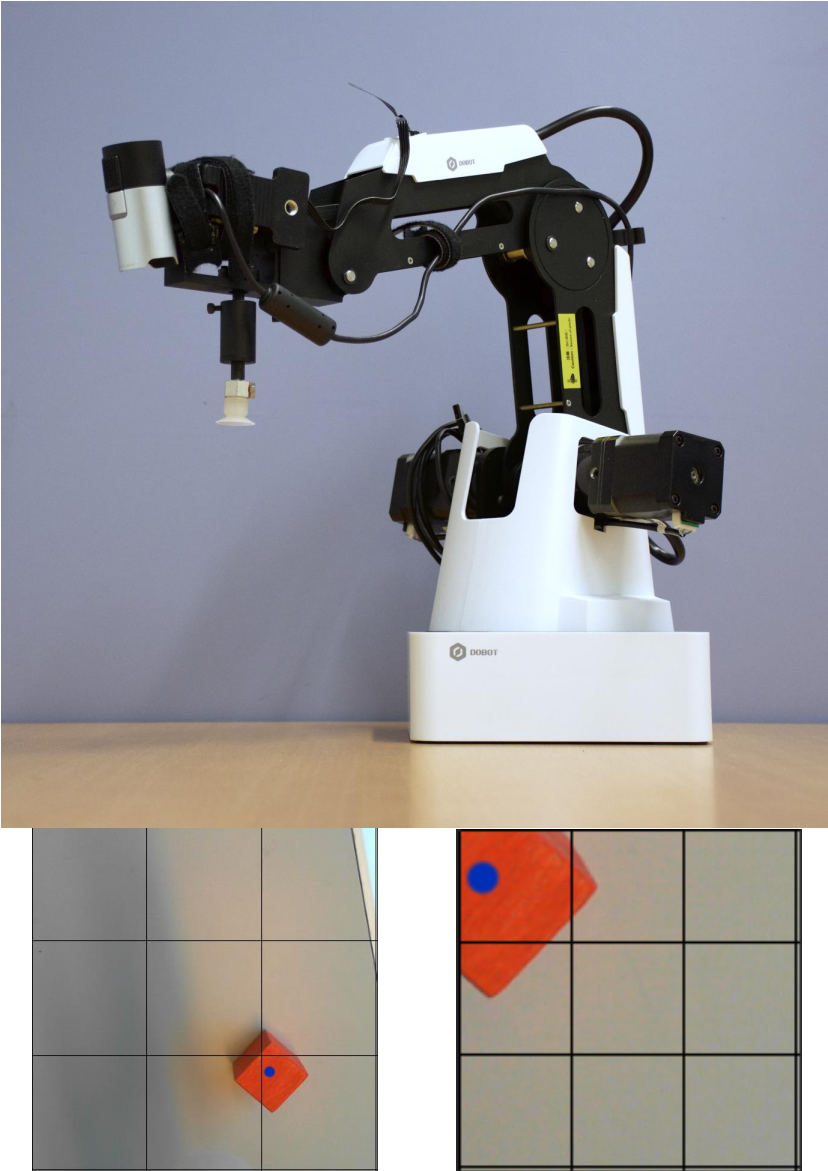

Robotic arms are increasingly used in manufacturing. While these machines offer many benefits, they also have disadvantages. One of these is their lack of understanding of their environment. Deep reinforcement learning can help a robot understand its surroundings. In this paper, we demonstrate that deep reinforcement learning for maze solving can be applied to make a robotic arm grab blocks. We apply multiple techniques to move the arm toward a block. One of them is a convolutional neural network (CNN) trained to hover the arm over a block. It outputs actions derived from images captured by a webcam mounted on top of the robotic arm. We show that training the whole neural network on different color spaces leads to differences in performance. These experiments are done with two neural networks. The first is our own network, built and trained from scratch. The second is a pre-trained network, YOLO, of which we train only the last layers. Our results show that the choice of color space affects performance in some cases, and that DQN is 2.4 times faster than imitation learning. Finally, we introduce a new technique that turns a frame into multiple tiny mazes. These tiny mazes are easy for the deep reinforcement learning algorithm to interpret and greatly speed up training.