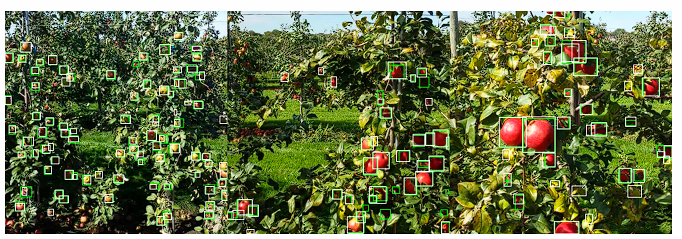

This study addresses the challenge of acquiring large amounts of training data for deep object detection models. We propose a framework that generates synthetic datasets by fine-tuning pretrained Stable Diffusion models. The generated images are manually annotated and used to train object detection models, which are then compared against a baseline model trained on real-world images. The results show that models trained on synthetic data perform comparably to the baseline. This suggests that synthetic data generation is a viable alternative to extensive real-world data collection for training deep models.